FPGA-based deep learning accelerators address several key challenges facing the field. This article covers fast CNN inference, the cost of training, and memory requirements. It also looks at how the accelerator architecture reduces the cost of training and of transferring data.

Fast CNN inference

An accelerator for CNN inference is the key to speeding up deep learning models on an FPGA. The accelerator consists of several building-block modules and handles the data flow between them. In the input stage, it stores the feature and weight blocks and sends them to the processing elements (PEs). The accelerator reads data from off-chip memory and computes the layers in the output stage. The results then stream to external memory.

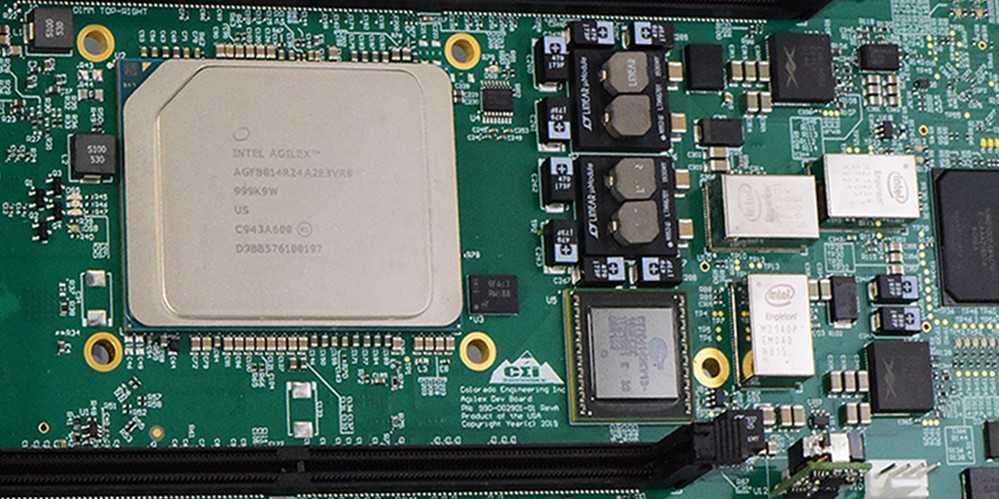

An FPGA-based accelerator can offer better scalability and lower latency than an ASIC-based accelerator. It can also require less power and reduce the overall system cost. Moreover, it is flexible: users can configure the number of parallel multipliers used to accelerate CNN inference, and all convolution layers share those multipliers.
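
The effect of the multiplier count can be estimated with a rough model. The sketch below assumes every multiplier performs one multiply-accumulate (MAC) per cycle with perfect utilization, which real designs rarely reach. The layer shapes and the function names `conv_macs` and `estimated_cycles` are hypothetical and chosen only for illustration.

```python
import math

def conv_macs(out_h, out_w, out_ch, in_ch, k):
    """MAC count for one convolution layer with a k x k kernel (no grouping)."""
    return out_h * out_w * out_ch * in_ch * k * k

def estimated_cycles(macs, num_multipliers):
    """Ideal cycle count if every multiplier is busy on every cycle."""
    return math.ceil(macs / num_multipliers)

# Hypothetical layer shapes: (name, MAC count).
layers = [
    ("conv1", conv_macs(32, 32, 16, 3, 3)),
    ("conv2", conv_macs(16, 16, 32, 16, 3)),
]

# All layers share the same multiplier bank, so they run one after another.
for num_multipliers in (64, 128, 256):
    total = sum(estimated_cycles(macs, num_multipliers) for _, macs in layers)
    print(f"{num_multipliers:>4} multipliers -> about {total} cycles")
```

Under this idealized model, doubling the multipliers roughly halves the cycle count until memory bandwidth or control overhead becomes the limit.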

The accelerator contains the following components: REGISTER_BANK, MEMORY_SYSTEM, and FSM. The MEMORY_SYSTEM comprises four Block RAMs. RAM_1 stores weights, while RAM_2 and RAM_3 store biases. RAM_4 stores intermediate values. The final output of the CNN is read from the on-chip memory and compared to the validation data.

In addition, the FPGA-based accelerator has two on-chip RAMs that store intermediate results and pixel results. These RAMs are well suited to fast CNN inference. The M20K RAMs can store intermediate results, suit pixel-scale data, and have a low power footprint. As a result, the power consumption of the AlexNet accelerator is similar to that of prior accelerators.

The accelerator is a pipelined architecture that executes multiple stages of computation in parallel on different data. It uses synchronized registers to decouple these stages. As the size of the activation increases, the number of blocks processed per PE increases as well. A fast CNN accelerator is therefore critical to a high-speed, high-volume machine learning system.
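
To make the pipelining idea concrete, here is a minimal software simulation, not the accelerator's actual design. It assumes a linear pipeline with one register after each stage. The function `run_pipeline` and the three toy stages are hypothetical.

```python
def run_pipeline(stages, inputs):
    """Simulate a linear pipeline with one register after each stage.

    On every cycle each stage reads the register in front of it and writes
    its own output register, so all stages work on different items at once.
    Returns the outputs and the number of simulated cycles.
    """
    regs = [None] * len(stages)   # None marks an empty register (a bubble)
    feed = list(inputs)
    outputs = []
    cycles = 0
    while feed or any(r is not None for r in regs):
        # A value held in the last register leaves the pipeline this cycle.
        if regs[-1] is not None:
            outputs.append(regs[-1])
        # Update back to front so each stage sees the previous cycle's value.
        for i in range(len(stages) - 1, 0, -1):
            regs[i] = stages[i](regs[i - 1]) if regs[i - 1] is not None else None
        regs[0] = stages[0](feed.pop(0)) if feed else None
        cycles += 1
    return outputs, cycles

# Three toy stages standing in for load, multiply, and add.
stages = [lambda x: x, lambda x: x * 2, lambda x: x + 1]
results, cycles = run_pipeline(stages, [1, 2, 3, 4])
print(results, cycles)
```

In this model, four items pass through three stages in seven cycles, whereas running each item through all stages before starting the next would take twelve stage-steps.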

Cost of training

There are various ways to accelerate deep learning, but no single approach is efficient for every workload. Custom ASICs are the fastest and most cost-effective way to accelerate deep learning, and they can work with modern frameworks. However, some non-FPGA approaches are less efficient and more expensive.

While general-purpose processors are great for general-purpose computing, they are not ideal for deep learning. FPGA-based systems can also be more efficient than GPU-based ones. Unlike general-purpose processors, FPGAs can be tuned for both latency and throughput. This approach is not limited to one kind of network; other neural network architectures can run on the same hardware.

The main FPGA-based inference engine consists of an Arria 10 GX FYI (moderate-sized) FPGA fabricated with 20-nanometer process technology. The FPGA is hosted in a Dell server equipped with an Intel Xeon Gold 6130 Skylake CPU. The Skylake CPU has sixteen cores per socket and two threads per core. To compare the performance of the two FPGAs, we will use different configurations of Intel Skylake CPUs.

The full cost of the accelerator includes a constant part, the resource overhead of the shell, and a dynamic part that depends on the accelerator architecture. For example, different target platforms have different base infrastructures for network connections, memory interfaces, and host interfaces. The FINN-R platform supports a wide variety of such platforms.

The cost of training with an FPGA-based deep learning accelerator can be significantly lower than with a GPU-based solution. CNNs implemented on an FPGA are also easier to parallelize, resulting in lower hardware resource costs. The higher the arithmetic intensity, the lower the overall cost. In addition, the complexity of the accelerator grows in proportion to the bandwidths of both factors.
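
One common way to reason about arithmetic intensity is the roofline model, which is a general performance model and not something specific to this accelerator. It bounds attainable performance by the lower of peak compute and memory bandwidth times intensity. The device figures below are hypothetical, not taken from any datasheet.

```python
def attainable_gops(peak_gops, bandwidth_gb_s, ops_per_byte):
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_gops, bandwidth_gb_s * ops_per_byte)

# Hypothetical device figures used only for illustration.
PEAK_GOPS = 1000.0
BANDWIDTH_GB_S = 20.0

for ops_per_byte in (5, 25, 50, 100):
    bound = attainable_gops(PEAK_GOPS, BANDWIDTH_GB_S, ops_per_byte)
    limit = "memory" if bound < PEAK_GOPS else "compute"
    print(f"{ops_per_byte:>4} ops/byte -> {bound:7.1f} GOP/s ({limit}-bound)")
```

A layer with low arithmetic intensity leaves most multipliers idle while waiting for data, so the same hardware delivers less work per unit cost.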

Neural network architectures

An FPGA-based deep learning accelerator is an efficient tool for speeding up deep learning. The underlying FPGA architecture allows scalable CNN modules to be integrated into an application’s processing flow. The accelerator is composed of computation modules and on-chip memory. The on-chip memory stores intermediate and final results of CNNs. A custom DMA configuration module controls mSGDMA behavior by writing instructions with varying transfer sizes.

It is important to note that the FPGA platform can help to build custom-designed CNN accelerators, but this is not necessary for a deep learning application. Furthermore, while custom-designed CNN accelerators are desirable, they require considerable development time and cannot keep pace with the evolving CNN algorithms and applications.

The most common method for building a deep learning accelerator is to use a programmable hardware device. An FPGA-based accelerator can increase throughput by computing multiple batches of images. However, the processing time per batch is relatively high, which makes batching unsuitable for applications where latency is an essential factor. Various hardware accelerators, including FPGA-based CNN accelerators, can overcome this drawback. The main benefits of FPGA-based CNN accelerators include high reconfigurability, fast turnaround time, and better performance than ASICs. They can also be more energy-efficient than GPU-based CNN accelerators.

A deep learning accelerator of this kind is built to accelerate convolutional neural networks. The number of parallel multipliers constrains the hardware resources used.

The architecture of the fpgaConvNet framework

The fpgaConvNet framework is a powerful architecture for FPGA deep learning process accelerators. This framework supports a broad range of network types, including compound modules and conventional ConvNets. As a result, it can accelerate the learning process by up to 1.5x. In addition, this architecture can meet specific application requirements. For example, it supports CNN-based tasks in self-driving cars and UAVs.

The fpgaConvNet framework uses a Synchronous Dataflow (SDF) modeling core to generate high-throughput hardware mappings. High-throughput applications enable batch processing and allow for optimization opportunities. The fpgaConvNet framework also supports exploring a wide range of architectural design spaces. This flexibility allows it to map both shallow and dense networks.
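
In an SDF model, each actor produces and consumes a fixed number of tokens per firing, and a valid schedule must satisfy the balance equations on every edge. The sketch below solves those equations for a small graph. It illustrates the general SDF technique, not fpgaConvNet's own implementation; the function `repetition_vector`, the actor names, and the rates are all hypothetical. It requires Python 3.9 or later for `math.lcm`.

```python
from fractions import Fraction
from math import lcm

def repetition_vector(actors, edges):
    """Solve the SDF balance equations q[src] * prod == q[dst] * cons.

    edges: list of (src, dst, tokens_produced, tokens_consumed).
    Assumes a connected, consistent graph; raises ValueError otherwise.
    """
    rate = {actors[0]: Fraction(1)}   # fix one actor, derive the rest
    pending = list(edges)
    while pending:
        progressed = False
        for edge in list(pending):
            src, dst, prod, cons = edge
            if src in rate and dst in rate:
                if rate[src] * prod != rate[dst] * cons:
                    raise ValueError("inconsistent rates")
            elif src in rate:
                rate[dst] = rate[src] * prod / cons
            elif dst in rate:
                rate[src] = rate[dst] * cons / prod
            else:
                continue              # neither end known yet, retry later
            pending.remove(edge)
            progressed = True
        if not progressed:
            raise ValueError("graph is not connected")
    # Scale the fractional rates up to whole firing counts.
    scale = lcm(*(r.denominator for r in rate.values()))
    return {a: int(rate[a] * scale) for a in actors}

# Hypothetical three-actor chain: conv -> pool -> fc.
actors = ["conv", "pool", "fc"]
edges = [("conv", "pool", 1, 4), ("pool", "fc", 1, 16)]
print(repetition_vector(actors, edges))
```

The resulting firing counts tell a mapper how much relative work each stage performs per iteration, which is the starting point for balancing the hardware allocated to each one.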

The CNN architecture involves an on-chip memory and an external DDR3 memory. The mSGDMA IP is used to handle small and non-contiguous data movement efficiently. A custom DMA configuration module controls the behavior of the mSGDMA by writing instructions with variable transfer sizes. The same module also generates a start signal for the FC and CONV modules.
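
The idea of issuing transfers with variable sizes can be sketched in software. The example below builds one transfer descriptor per row of a feature-map tile and merges rows that happen to be contiguous into a single longer transfer. The `Descriptor` fields and both functions are hypothetical; they do not reflect the real mSGDMA descriptor layout or register interface.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Descriptor:
    """Hypothetical transfer descriptor: copy `length` bytes from src to dst."""
    src_addr: int
    dst_addr: int
    length: int

def tile_descriptors(base, row_pitch, tile_x, tile_y, tile_w, tile_h, dst_base):
    """One descriptor per tile row of a row-major, one-byte-per-element map."""
    descs = []
    for r in range(tile_h):
        src = base + (tile_y + r) * row_pitch + tile_x
        dst = dst_base + r * tile_w
        descs.append(Descriptor(src, dst, tile_w))
    return descs

def merge_contiguous(descs):
    """Fuse back-to-back descriptors into fewer, longer transfers."""
    merged = []
    for d in descs:
        if merged:
            last = merged[-1]
            if (last.src_addr + last.length == d.src_addr
                    and last.dst_addr + last.length == d.dst_addr):
                merged[-1] = Descriptor(last.src_addr, last.dst_addr,
                                        last.length + d.length)
                continue
        merged.append(d)
    return merged

# A 4x3 tile inside an 8-wide map: three separate short transfers.
narrow = merge_contiguous(tile_descriptors(0x1000, 8, 2, 1, 4, 3, 0))
# A full-width tile: rows are contiguous and collapse into one transfer.
wide = merge_contiguous(tile_descriptors(0x1000, 8, 0, 1, 8, 3, 0))
print(len(narrow), len(wide))
```

Choosing the transfer size per descriptor lets the same engine serve both small scattered reads and long sequential ones.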

The inner-product layer is responsible for MAC operations. The FC module shares a multiplier bank with the CONV modules and owns adders shared across all fully connected layers. Parallel MAC operations compute multiple output pixels simultaneously so that a single input pixel can work with several rows of the kernel weight matrix. This approach eliminates the need for parallel adder trees.
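
The following sketch shows, in software, why broadcasting one input to several accumulators avoids an adder tree. Each output keeps its own running sum, so no cycle needs to sum many products at once. The function `fc_layer` and the `lanes` parameter, which stands for the number of parallel MAC units, are hypothetical names.

```python
def fc_layer(inputs, weights, bias, lanes):
    """Fully connected layer computed one input at a time.

    weights[j][i] is the weight from input i to output j. Outputs are
    processed in groups of `lanes`; within a group, each input value is
    broadcast to every lane and added into that lane's own accumulator.
    """
    n_out = len(weights)
    acc = list(bias)
    for start in range(0, n_out, lanes):
        group = range(start, min(start + lanes, n_out))
        for i, x in enumerate(inputs):     # one input per cycle
            for j in group:                # parallel MACs in hardware
                acc[j] += weights[j][i] * x
    return acc

inputs = [1, 2, 3]
weights = [[1, 0, 0], [0, 1, 0], [1, 1, 1], [2, 2, 2]]
bias = [0, 1, 0, -1]
print(fc_layer(inputs, weights, bias, lanes=2))
```

The result does not depend on `lanes`; the parameter only changes how many outputs are updated per step, which in hardware corresponds to how many multipliers the FC module borrows from the shared bank.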

The dataflow architecture is fully rate balanced. This architecture enables all engines to produce data in a similar order. It also reduces latency by eliminating buffers between the layers. The engines start computing as soon as the previous one finishes creating output.